AI-Engineering

What are we doing?

In the rapidly evolving landscape of artificial intelligence, integrating Large Language Models (LLMs) with software engineering has become increasingly critical. As AI systems grow more sophisticated and pervasive, we tackle the complex challenges that emerge from this convergence. Our research group is committed to advancing the state of the art in AI engineering, with a particular focus on model modularization, model retrieval, model security, and multi-agent collaboration. We actively engage with the international research community and regularly publish at top-tier conferences and journals.

Recent Updates

- Oct, 2025 Our paper "ModularEvo: Evolving Multi-Task Models via Neural Network Modularization and Composition" has been accepted by the ICSE'26 research papers track.

- Jul, 2025 Our paper "Backdoor Defense via Enhanced Splitting and Trap Isolation" has been accepted by the International Conference on Computer Vision (ICCV'25).

- Jul, 2025 Our research paper "NeMo: A Neuron-Level Modularizing-While-Training Approach for Decomposing DNN Models" has been accepted by ACM Transactions on Software Engineering and Methodology (TOSEM).

- May, 2025 Our paper "CABS: Conflict-Aware and Balanced Sparsification for Enhancing Model Merging" has been accepted by ICML'25.

- Jul, 2024 Our paper "FedEvalFair: A Privacy-Preserving and Statistically Grounded Federated Fairness Evaluation Framework" has been accepted by ACM MM'24.

- Feb, 2024 Our ICSE'24 paper "Modularizing while Training: A New Paradigm for Modularizing DNN Models" won an ACM SIGSOFT Distinguished Paper Award.

- Nov, 2023 Our paper "Reusing Convolutional Neural Network Models through Modularization and Composition" has been accepted by TOSEM as a research paper.

- Aug, 2023 Our paper "AutoMRM: A Model Retrieval Method Based on Multimodal Query and Meta-learning" has been accepted by CIKM'23.

- Jun, 2023 Our paper "Modularizing while Training: A New Paradigm for Modularizing DNN Models" has been accepted by the ICSE'24 research papers track.

- Dec, 2022 Our paper "Reusing Deep Neural Network Models through Model Re-engineering" has been accepted by the ICSE'23 technical track.

- Apr, 2022 Our paper "Patching Weak Convolutional Neural Network Models through Modularization and Composition" has been accepted by the ASE'22 research papers track.

Current Projects

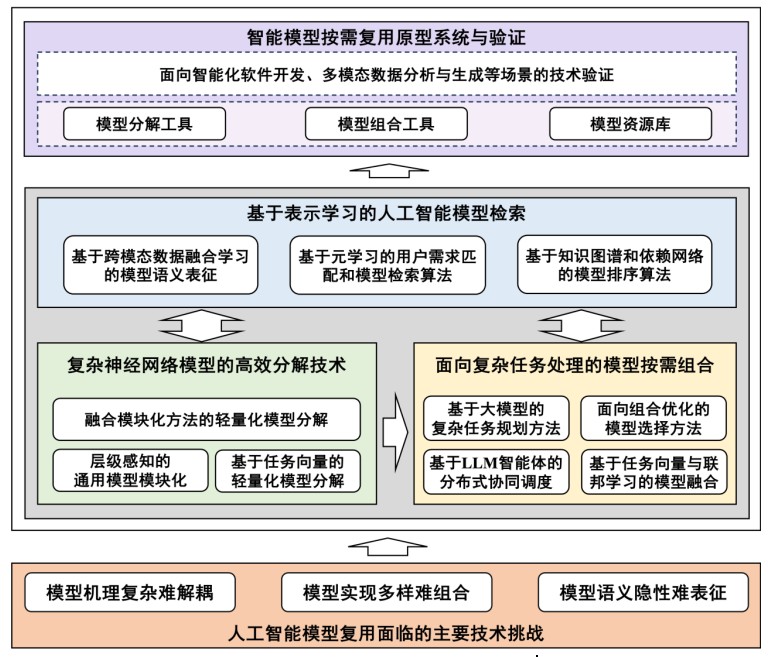

AI Model Reuse

AI models are increasingly treated as reusable software components, yet both large-scale pretraining and task-specific fine-tuning face limitations for efficient reuse at scale. We advocate a modular "decompose + compose" paradigm to enable on-demand reuse and improve the development efficiency of intelligent software. Given an application task and a pool of candidate models, we address three key problems: selecting appropriate reuse granularity to avoid unnecessary overhead; composing multiple models to solve complex tasks beyond a single model’s capability; and accurately retrieving and matching relevant models. We focus on model modularization, model composition, and model retrieval, and will develop practical methods and tools to enable efficient model reuse across domains.

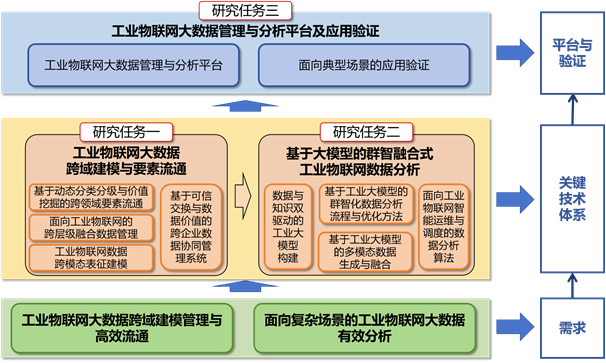

Multimodal Big Data Modeling and Analysis for IoT

This project focuses on multimodal big data modeling and analysis for the Industrial Internet of Things (IIoT), addressing the application challenges posed by cross-layer, cross-domain multimodal data in industrial IoT systems. The main challenges are that the complexity of multimodal data makes it hard to model and manage, that large models lack knowledge of industrial mechanisms, and that small models generalize poorly, which leads to ineffective analysis. The project addresses two fundamental scientific questions: "How can hierarchical modeling and management improve data circulation in the Industrial IoT?" and "How can collective intelligence fusion methods make Industrial IoT data analysis more effective?" We aim to achieve breakthroughs in six key technologies:

- cross-modal data representation modeling;
- cross-layer data fusion management;
- cross-domain tiered data pricing;
- construction of industrial large models driven jointly by data and knowledge;
- collective intelligence fusion for data analysis based on industrial large models;
- intelligent operation and scheduling algorithms for the Industrial IoT.

The project will develop an Industrial IoT big data management and analysis platform and validate it in five typical aerospace industry scenarios, with the goal of advancing the state of the art in industrial IoT data processing and analysis.

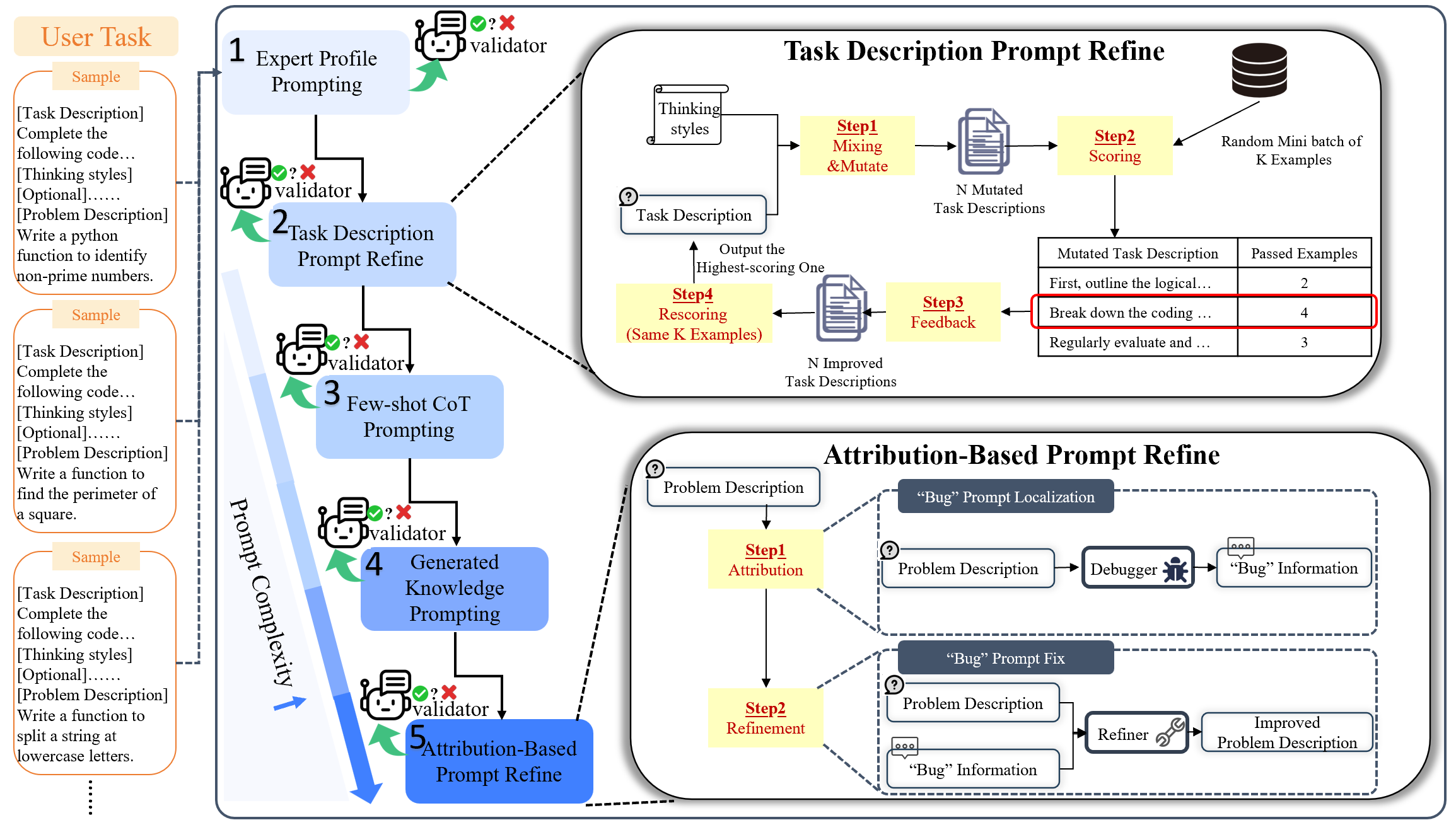

Prompt Debugging and Optimization for LLMs

In recent years, Large Language Models (LLMs) such as GPT-4, Llama 3, and Code Llama have demonstrated strong capabilities in content generation, translation, and question answering, and have become a foundational technology in AI. However, model outputs are highly sensitive to prompts: the quality of prompt design directly affects generation quality, yet the relationship between prompts and outputs is complex and difficult to interpret. Today, prompt debugging relies largely on trial and error and practitioner experience rather than on systematic methods for analysis and optimization, which makes it inefficient and costly. Unlike traditional programming, which has mature debugging toolchains, prompt debugging still lacks well-established techniques. This project studies efficient and interpretable methods for prompt debugging and optimization, aiming to improve the performance of LLM applications and accelerate their broader deployment.

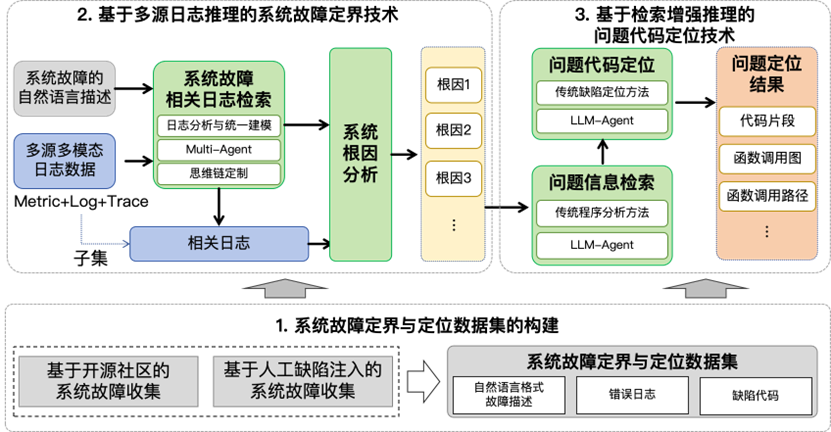

Root Cause Analysis and Localization of Software Failures

Modern systems generate massive, heterogeneous logs that are essential for diagnosing failures but difficult to analyze reliably by hand. Building on Large Language Models (LLMs), we investigate intelligent fault scoping and localization that combines multi-source log reasoning with code analysis. We will: (1) build an end-to-end evaluation dataset covering problem descriptions, system logs, and code snippets; (2) develop multi-source log reasoning methods that integrate traditional log modeling with multi-agent LLM reasoning to retrieve fault-related logs, analyze root causes, and map failures precisely to system modules with clear explanations; and (3) propose retrieval-augmented reasoning for code-level localization, which uses classic program analysis (e.g., test-based fault localization) to gather evidence, retrieves relevant program knowledge, and relies on LLM agents to synthesize a final answer in the form of faulty code snippets or call graphs/paths.

Publications

Selected Research Papers

- ModularEvo: Evolving Multi-Task Models via Neural Network Modularization and Composition

  Wenrui Long, Binhang Qi, Hailong Sun, Zongzhen Yang, Ruobing Zhao, Xiang Gao. The 48th IEEE/ACM International Conference on Software Engineering (ICSE), 2026.

- Backdoor Defense via Enhanced Splitting and Trap Isolation

  Hongrui Yu, Lu Qi, Wanyu Lin, Jian Chen, Hailong Sun, Chengbin Sun. International Conference on Computer Vision (ICCV), 2025.

- NeMo: A Neuron-Level Modularizing-While-Training Approach for Decomposing DNN Models

  Xiaohan Bi, Binhang Qi, Hailong Sun, Xiang Gao, Yue Yu, Xiaojun Liang. ACM Transactions on Software Engineering and Methodology (TOSEM), 2025.

- CABS: Conflict-Aware and Balanced Sparsification for Enhancing Model Merging

  Zongzhen Yang, Binhang Qi, Hailong Sun, Wenrui Long, Ruobin Zhao, Xiang Gao. The 42nd International Conference on Machine Learning (ICML), 2025.

- Towards Open-World Domain Adaptation via Iteratively Contrastive Learning and Clustering

  Jingzheng Li, Hailong Sun, Jiyi Li, Pengpeng Chen, Shikui Wei. IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 2025.

- FedEvalFair: A Privacy-Preserving and Statistically Grounded Federated Fairness Evaluation Framework

  Zhongchi Wang, Hailong Sun, Zhengyang Zhao. The 32nd ACM International Conference on Multimedia (MM), 2024.

- ModelGalaxy: A Versatile Model Retrieval Platform

  Wenling Zhang, Yixiao Li, Zhaotian Li, Hailong Sun, Xiang Gao, Xudong Liu. The 47th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR), Demonstration Track, 2024.

- Modularizing while Training: A New Paradigm for Modularizing DNN Models

  Binhang Qi, Hailong Sun, Hongyu Zhang, Ruobin Zhao, Xiang Gao. The 46th IEEE/ACM International Conference on Software Engineering (ICSE), 2024.

- RA3: Human-in-the-loop Framework for Interpreting and Improving Image Captioning with Relation-Aware Attribution Analysis

  Lei Chai, Lu Qi, Hailong Sun, Jingzheng Li. The 40th IEEE International Conference on Data Engineering (ICDE), 2024.

- Reusing Convolutional Neural Network Models through Modularization and Composition

  Binhang Qi, Hailong Sun, Hongyu Zhang, Xiang Gao. ACM Transactions on Software Engineering and Methodology (TOSEM), 2024.

- Target Structure Learning Framework for Unsupervised Multi-Class Domain Adaptation

  Jingzheng Li, Hailong Sun, Lei Chai, Jiyi Li. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 2024.

- AutoMRM: A Model Retrieval Method Based on Multimodal Query and Meta-learning

  Zhaotian Li, Binhang Qi, Hailong Sun, Xiang Gao. The 32nd ACM International Conference on Information and Knowledge Management (CIKM), 2023.

- Black-Box Data Poisoning Attacks on Crowdsourcing

  Pengpeng Chen, Yongqiang Yang, Dingqi Yang, Hailong Sun, Zhijun Chen, Peng Lin. The 32nd International Joint Conference on Artificial Intelligence (IJCAI), 2023.

- Learning from Noisy Crowd Labels with Logics

  Zhijun Chen, Hailong Sun, Haoqian He, Pengpeng Chen. The 39th IEEE International Conference on Data Engineering (ICDE), 2023.

- LiFT: Transfer Learning in Vision-Language Models for Downstream Adaptation and Generalization

  Jingzheng Li, Hailong Sun. The 31st ACM International Conference on Multimedia (MM), 2023.

- Neural-Hidden-CRF: A Robust Weakly-Supervised Sequence Labeler

  Zhijun Chen, Hailong Sun, Wanhao Zhang, Chunyi Xu, Pengpeng Chen. The 29th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), 2023.

- Reusing Deep Neural Network Models through Model Re-engineering

  Binhang Qi, Hailong Sun, Xiang Gao, Hongyu Zhang, Zhaotian Li, Xudong Liu. The 45th IEEE/ACM International Conference on Software Engineering (ICSE), 2023.

- Patching Weak Convolutional Neural Network Models through Modularization and Composition

  Binhang Qi, Hailong Sun, Xiang Gao, Hongyu Zhang. The 37th IEEE/ACM International Conference on Automated Software Engineering (ASE), 2022.

ACM SIGSOFT Distinguished Paper Award

Our Team

Faculty

Students

Ph.D. Students

Zhongchi Wang

Ph.D. 2021

Federated Learning

Xiaohan Bi

Ph.D. 2023

DNN model modularization

Ruobing Zhao

Ph.D. 2023

LLM agent-based software defect demarcation and localization

Lu Qi

Ph.D. 2024

Machine Learning

Yuxiang Wang

Ph.D. 2024

RAG-based industrial big data analytics

Yixiao Li

Ph.D. 2024

Large model-based time series analysis

Hongrui Yu

Ph.D. 2024

AI security

Yuchen Liu

Ph.D. 2025

Large model merging and reuse

Huinan Zhang

Ph.D. 2025

Model retrieval based on model representations

Peng Sun

Ph.D. 2025

AI4CAE

Master's Students

Wenling Zhang

Master 2023

Model retrieval

Zongzhen Yang

Master 2023

Large model merging and reuse

Wenrui Long

Master 2023

Hao Gao

Master 2023

Data processing intelligent agent

Zhengyang Zhao

Master 2024

Haobo Xu

Master 2025

Hang Xu

Master 2025

Yuxin Chen

Master 2025

Intelligent CAE software

Kailin Si

Master 2025

Large model retrieval

Former Students

Binhang Qi

Ph.D. 2020

Moved to NUS as a postdoc

Lei Chai

Ph.D. 2020

Zizhe Wang

Ph.D. 2017

Moved to Qiyuan Laboratory

Pengpeng Chen

Ph.D.

Moved to China Institute of Aeronautical Technology 501

Jing Wang

Master 2019

Moved to Bank of China